Learnings from the AI & Gender Conference

From Young Women Lead

About the conference

On Saturday 14th February 2026, we invited both professionals and young women to learn how AI impacts young women and girls, and what we can do now to shape its regulation and use.

The event was hosted at Old College, the University of Edinburgh, between 10.30am and 4pm.

This event was organised by young women aged 16-25 as part of Young Women Lead.

Whilst the event was primarily aimed at young people aged 16+, we also welcomed professionals with an interest in digital violence against women and girls (VAWG) and those who work with young people.

The event was a follow-up to our Young Women Lead project in 2025, which produced our Guide to AI.

Key learnings from the workshops

‘Exposed’

Safa Yousaf (she/her)

Amina, The Muslim Women’s Resource Centre (Amina MWRC)

This workshop explored intimate image abuse and its impact on Muslim and Minority Ethnic women, including honour-based abuse.

Exposed advocates for legal reform, as the current law in Scotland only treats images or videos of a sexual nature as intimate image abuse. This does not include pictures of women without hijab, or with uncovered arms or legs.

These images may seem harmless or make you wonder, ‘what’s the big deal?’ However, sharing them without consent can have serious consequences for these women, often leading to honour-based abuse or even forced marriage.

Key summary

Amina MWRC is an intersectional organisation in Scotland that empowers and supports Muslim and Minority Ethnic (ME) women by serving as a vital link between them and the barriers they face every day. We deliver services across Scotland, both online and in person. Our support includes a national helpline offering free, confidential, culturally sensitive assistance on issues such as domestic abuse, mental health, employability, and visa concerns. We also provide telephone befriending, domestic abuse and financial advocacy, employability assistance, adult learning classes, VAWG prevention campaigns, and weekly creative well-being sessions for women in Glasgow and Dundee.

What does building a feminist AI look like?

Eva Blum-Dumontet (she/her)

CHAYN

In this workshop we explored what building a feminist AI can look like – what makes an AI feminist? What ethical considerations do we want to keep in mind? What does feminist design look like?

Key summary

So, what makes a feminist AI? Our group said:

- It would take into consideration opinions from people of different communities

- We would use a mixed workforce to programme it

- It would have ethical funding sources

- It would create eco-friendly alternatives

- It would remove service user bias (e.g. agreeing with the user all the time)

- It would use trigger warnings and be trauma-informed

- Its purpose would be to support those whose human rights have been neglected.

CHAYN creates tech that heals, not harms. They’ve built their Survivor AI to be trauma-informed and to support those impacted by intimate image abuse in removing harmful content.

Eva Blum-Dumontet is Head of Movement Building and Policy at Chayn, an organisation providing online resources to survivors of gender-based violence.

Young women’s Guide to AI

The Young Women’s Movement

In this workshop, we looked at the content and creation of our ‘Guide to AI’, which launched in August 2025, and explored where we can make key interventions to make an online world that’s safer for young women and girls.

Key summary

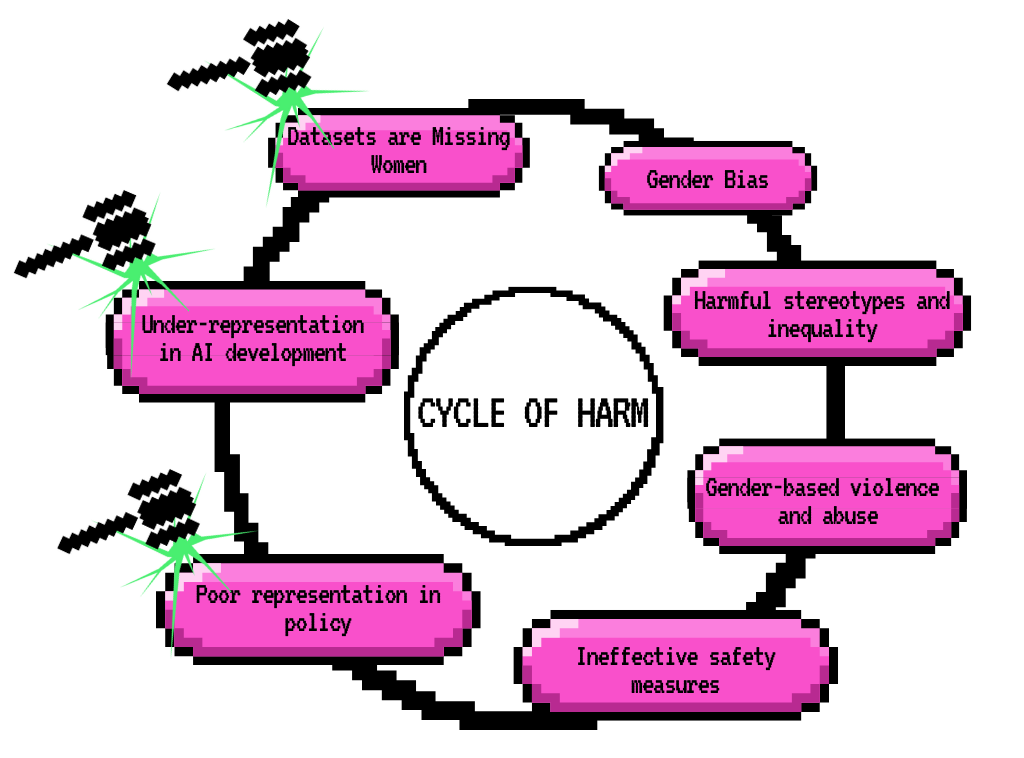

Participants mapped out our cycle of harm and predicted where key interventions might make a difference for young women.

We then asked: what safety guidelines would you introduce, or like to see introduced, to eradicate VAWG in the AI space?

The Young Women’s Movement is Scotland’s national organisation for young women and girls’ leadership and rights. We support young women and girls across Scotland to lead change on issues that matter to them by providing them with resources, networks and platforms to collectively challenge inequality.

When AI platforms sexualise women: Evidence, accountability and what comes next

Risa Kawamura (they/them) and Laura Kaun (she/her)

Center for Countering Digital Hate

Drawing on CCDH’s new research into Grok AI’s generation of sexualised images of women and girls at scale, this workshop invited participants to explore what accountability should look like when gendered harm is not accidental, but designed.

Key summary

Our facilitators asked: who is responsible when AI systemically sexualises women and girls at scale?

There are layers. The group felt strongly that individual accountability is still important. One person said, “When someone stabs another person, we don’t blame the knife, we blame the person.” There should be consequences for those who create sexual digital forgeries, and those who share them further.

However, systemic interventions are a longer-term solution.

There have to be consequences for AI platforms which generate forgeries, and for social media platforms where harm is spread. Government, education systems and parents all have a role to play in reducing harm long term.

Professor Clare McGlynn: “Everyone’s finally talking about AI and gender-based violence, but it’s not just Grok”

Clare McGlynn (she/her)

Clare McGlynn is a Professor of Law at Durham University and an expert on violence against women and girls, particularly online abuse, pornography and sexual violence. She has worked closely with politicians, survivors and women’s organisations to strengthen laws on extreme pornography and cyberflashing, and played a key role in developing the new laws combating sexually explicit deepfakes. Her book Exposed – the risk of extreme porn and how we fight back is being published in May 2026. She is also the co-author of Cyberflashing: recognising harms, reforming laws (2021) and Image-Based Sexual Abuse: a study on the causes and consequences of non-consensual imagery (2021).

Instagram: @claremcglynn_ LinkedIn: @clare-mcglynn TikTok: @claremcglynn_

Key summary

How did we get here?

- 2017: ‘Deepfakes’ develops new tech to create non-consensual porn. It was celebrities who were targeted first, as early models needed a lot of imagery to create sexual digital forgeries.

- 2018: Campaigners push to change the law on sexual digital forgeries, in line with the changes made to the law on upskirting.

- 2021: Campaigners continue their work to raise awareness of the harm of sexual digital forgeries, but the UK government takes the stance that “these images aren’t real.”

- 2023: Nudify apps are being used against children in schools in Spain.

- 2026: We’re finally discussing banning nudify apps, but it’s almost impossible to ban them completely. The technology is dual-use, and a ban is difficult to enforce.

What does the future hold?

- AI ‘smart glasses’ are used to film women without their consent.

- AI chatbots are designed for engagement to maximise profit. They’re not trauma-informed, and they encourage isolating and suicidal behaviours in vulnerable people. They also enable stalking by providing detailed instructions that facilitate harm against women and girls.

The examples above aren’t a far-off, “Black Mirror” future. They’re all emerging technologies that are already causing harm, and that harm will only continue to grow in scale.

Panel discussion

Our wonderful panel included:

- Antonia Sewell: Project Officer with Data Education in Schools at the University of Edinburgh, Antonia embeds data science and AI across the primary and secondary curriculum to provide an early introduction to data and statistics concepts.

- Dawn McAra Hunter: A queer, neurodivergent AI ethics researcher and Responsible AI specialist whose work explores how power, identity, and discourse shape the development and governance of artificial intelligence and its impact on marginalised communities.

- Wiktoria Kulik: A Responsible AI Manager at Accenture, supporting organisations across multiple sectors in AI governance and responsible use of technology. She is a former policy advisor at the Centre for Data Ethics and Innovation and previously led Digital Ethics consulting at Sopra Steria.

Insights from the panel

Why is there an AI gender gap?

Dawn McAra Hunter: The AI gender gap is not necessarily bad, or to do with a lack of education. Women and girls are aware of the harms and are thinking critically about AI or exercising caution. It’s a merry-go-round of FOMO (fear of missing out), and they seem to be taking time to reflect on whether we need it and whether it’s proportional! More organisations should be considering: is AI really required for this task? More people should take a slow approach instead of rushing to it! It’s not a race.

How do we engage men and boys in thinking about how AI harms women and girls?

Dawn McAra Hunter: AI is intrinsically tied up in toxic masculinity. AI isn’t neutral, but it could be; it shouldn’t be masculine.

What we need to do is make AI boring again.

What do you wish people understood about AI?

Wiktoria Kulik: AI is not magic, it’s just really complicated maths. It doesn’t “think.” Underneath all that it’s working from a lot of biased data. It’s great that we’re using it more, because it has the potential to be a really helpful tool, but we need to exercise caution, and not see it as something that will solve all of our problems.

Antonia Sewell: AI is biased, and the reason that AI is biased is because the data it’s trained on is biased. And that’s because the society we live in is biased, and has been for a very long time.

Dawn McAra Hunter: I want people to consider the sustainability of AI, and each time you use it, ask – is this really worth the social and environmental impact?

Young Women Lead gives young women from across Scotland an opportunity to make real change in the lives of young women and girls, while developing their leadership skills, knowledge and confidence.

A huge thanks to our steering group of young women aged 16-25 for their input and hard work. This event would not have happened without them.