Guide to AI: Intersectionality and AI


AI AND GENDER

Intersectionality and AI

TL;DR

Intersectionality is a framework which helps us understand how different systemic inequalities can work together to oppress diversely marginalised people.

Many studies show that AI systems can reproduce and amplify racism, sexism, and other forms of discrimination.

AI development and regulation must incorporate an intersectional approach, or the technology of the future risks becoming the oppressive force of the present.

What is intersectionality?

Intersectionality, as defined by Kimberlé Crenshaw, is a framework for understanding how overlapping forms of inequality such as racism, sexism, and classism interact to create unique barriers for certain groups. When we apply this lens to Artificial Intelligence and young women, particularly those from marginalised racial, ethnic, and socio-economic backgrounds, the risks of compounded discrimination become clear.

Intersectional bias in AI

As Inga Ulnicane (2024) notes, AI systems can reinforce disadvantages based on gender, race, ethnicity, and other characteristics. This is because AI learns from historical data which often mirrors the inequalities and biases embedded in society. For example, recruitment algorithms have been shown to disadvantage women, penalise candidates who did not attend elite universities (often excluding working-class applicants), and perpetuate racial disparities in hiring. In more extreme cases, facial recognition systems have performed far worse on Black women’s faces than on white men’s, exhibiting an intersectional bias which compounds racial and gendered discrimination, as highlighted by Youjin Kong. This bias is not just an academic concern: it has real-world consequences, from wrongful arrests to being denied access to opportunities.
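To see why an intersectional check matters, here is a minimal Python sketch of how an audit might break down a face recognition system's error rate by group. The numbers are invented for illustration and are not taken from any study; the helper names `make_records` and `error_rates` are hypothetical.

```python
from collections import defaultdict


def make_records(gender, skin, n, n_errors):
    """Build n made-up test results for one group, n_errors of them wrong."""
    return [
        {"gender": gender, "skin": skin, "correct": i >= n_errors}
        for i in range(n)
    ]


def error_rates(records, keys):
    """Return the error rate for each group defined by the given keys."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for record in records:
        group = tuple(record[k] for k in keys)
        totals[group] += 1
        if not record["correct"]:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Invented audit data: 100 test images per group.
records = (
    make_records("man", "lighter", 100, 1)
    + make_records("woman", "lighter", 100, 5)
    + make_records("man", "darker", 100, 10)
    + make_records("woman", "darker", 100, 30)
)

by_gender = error_rates(records, ["gender"])        # one axis at a time
by_skin = error_rates(records, ["skin"])            # one axis at a time
by_both = error_rates(records, ["gender", "skin"])  # intersectional view

for group, rate in sorted(by_both.items()):
    print(group, f"{rate:.1%}")
```

In this made-up data, looking at gender alone or skin tone alone gives error rates of 17.5% and 20% for the worst-off groups, while the intersectional breakdown shows a 30% error rate for darker-skinned women. A single-axis audit would understate the harm to the group most affected.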

Several studies on computer vision and natural language processing have further exposed how AI encodes and amplifies social biases related to gender, race, ethnicity, sexuality, and other identities. Young, Wajcman and Sprejer (2023) point out that facial recognition software reliably identifies white men but often fails to recognise dark-skinned women, as demonstrated by Buolamwini and Gebru’s 2018 study. In natural language processing, research shows that word embeddings, which are models that learn meaning from large text datasets, reflect human-like gender biases. For instance, Google Translate has been found to default to male pronouns when translating gender-neutral sentences about STEM occupations (Prates et al., 2019). Examples like these reveal that AI does not simply mirror society but can also perpetuate and aestheticise existing power structures.
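The idea of gender bias in word embeddings can be made concrete with a small sketch. An embedding stores each word as a list of numbers (a vector), and one common way to probe for bias is to check whether an occupation word points more towards "he" or towards "she". The vectors below are hand-written toy values, not taken from any real model, and the function name `gender_lean` is hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity: 1 means same direction, -1 means opposite."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Toy 3-dimensional vectors, invented for illustration only.
# Real embeddings have hundreds of dimensions learned from text.
toy_vectors = {
    "he": [1.0, 0.0, 0.2],
    "she": [-1.0, 0.0, 0.2],
    "engineer": [0.6, 0.8, 0.1],
    "nurse": [-0.7, 0.6, 0.1],
    "teacher": [0.0, 1.0, 0.1],
}

# The "gender direction" is the difference between "he" and "she".
gender_direction = [
    a - b for a, b in zip(toy_vectors["he"], toy_vectors["she"])
]


def gender_lean(word):
    """Positive values lean towards 'he', negative towards 'she'."""
    return cosine(toy_vectors[word], gender_direction)


for word in ["engineer", "nurse", "teacher"]:
    print(word, round(gender_lean(word), 2))
```

In this toy example "engineer" leans towards "he" and "nurse" towards "she", while "teacher" sits in the middle. In a real model such leanings are not written by hand: they are absorbed from the text the model was trained on, which is how everyday stereotypes end up inside translation and search tools.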

In 2022, Pratyusha Ria Kalluri, a graduate student at Stanford University, discovered troubling biases in image-generating AI tools like Stable Diffusion and DALL-E. When prompted to create images of an American man with his house, the AI depicted a pale-skinned person in front of a large colonial-style home. But when asked for an African man with a fancy house, it generated a dark-skinned man in front of a simple mud house, despite the word “fancy”. Kalluri and her team found that these AI programs often rely on harmful stereotypes, such as associating poverty with Africa and assigning certain jobs disproportionately to women or BAME (Black, Asian and Minority Ethnic) individuals.

Real world consequences

The limited availability of intersectional data, as discussed by The Alan Turing Institute, compounds the problem. Much AI research and policy still treats gender as binary and fails to examine race or other intersecting identities in meaningful depth. This narrow focus, often centring “women in tech” in ways that privilege white women, overlooks the specific barriers faced by LGBTQ+ women, BAME women, disabled women, and those at other intersections of marginalisation. The danger here is twofold, as AI both inherits bias from unequal social systems and amplifies it through automated decision-making at scale.

For instance, ProPublica’s 2016 investigation into the COMPAS risk assessment tool revealed that Black defendants were nearly twice as likely as white defendants to be incorrectly labelled high risk. Similarly, Lum and Isaac’s research on the PredPol predictive policing system demonstrated how biased policing data could create feedback loops that further target Black and Brown neighbourhoods, not because crime rates are higher, but because biased reporting and enforcement make those communities appear more criminal in the dataset.
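The feedback loop described above can be sketched with a deliberately simplified simulation. It assumes two hypothetical neighbourhoods, A and B, with exactly the same true crime rate, where A starts with more recorded incidents because it was policed more heavily in the past. The rule "send patrols wherever the records are highest" is an assumption made for illustration and is not PredPol's actual algorithm.

```python
def simulate_patrols(recorded, true_rate, rounds, patrols_per_round=10):
    """Send all patrols to the area with the most recorded incidents.

    Crime is only recorded where patrols are present, so each round adds
    true_rate * patrols_per_round incidents to the patrolled area only.
    """
    recorded = dict(recorded)  # copy so the input is not modified
    for _ in range(rounds):
        target = max(recorded, key=recorded.get)
        recorded[target] += true_rate * patrols_per_round
    return recorded


# Hypothetical starting records: A was over-policed in the past.
initial = {"A": 60.0, "B": 40.0}

# Both areas have the SAME true rate of incidents per patrol.
final = simulate_patrols(initial, true_rate=0.5, rounds=20)

share_before = initial["A"] / sum(initial.values())
share_after = final["A"] / sum(final.values())

print(f"A's share of recorded crime: {share_before:.0%} -> {share_after:.0%}")
```

Even though both neighbourhoods are equally safe in this sketch, A's share of recorded crime rises from 60% to 80% after 20 rounds, simply because that is where the patrols were sent to look. The dataset ends up reflecting where police went, not where crime happened.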

Amid these systemic issues, campaigns like Exposed, led by Amina, the Muslim Women’s Resource Centre, are crucial. Exposed highlights a form of abuse that is often overlooked: sharing someone’s private photos without their consent. For many Muslim and Black and Minority Ethnic women, even images that are not sexual, like a photo without a hijab or with uncovered arms, can cause serious harm. These pictures can lead to shame, family pressure, honour-based abuse, or even forced marriage. Right now, Scottish law only protects people if the images are sexual, leaving many women with no legal protection. Exposed helps raise awareness and deepen understanding of the real impact this abuse can have, especially within cultural and religious contexts that are often overlooked in broader discussions of image-based abuse.

Looking to the future

While much of the evidence highlights harm, there are glimpses of AI’s potential for good. UNESCO (2020) points to tools that can help employers write gender-sensitive job postings to attract a more diverse workforce, illustrating that AI can support equality when designed intentionally and inclusively. But such outcomes require an explicit commitment to intersectionality in both policy and practice.

As Collett and Dillon (2019) argue, this means drawing on feminist, queer, postcolonial, and critical race theory to understand how AI interacts with existing power structures and to ensure law and policy address not just individual inequalities but their intersections. For young women, especially those who live within the margins, intersectional approaches to AI are essential for building systems that do not silently replicate the very inequalities they promise to solve. Without them, the technology of the future risks becoming the oppression of the present, only faster and more efficient.

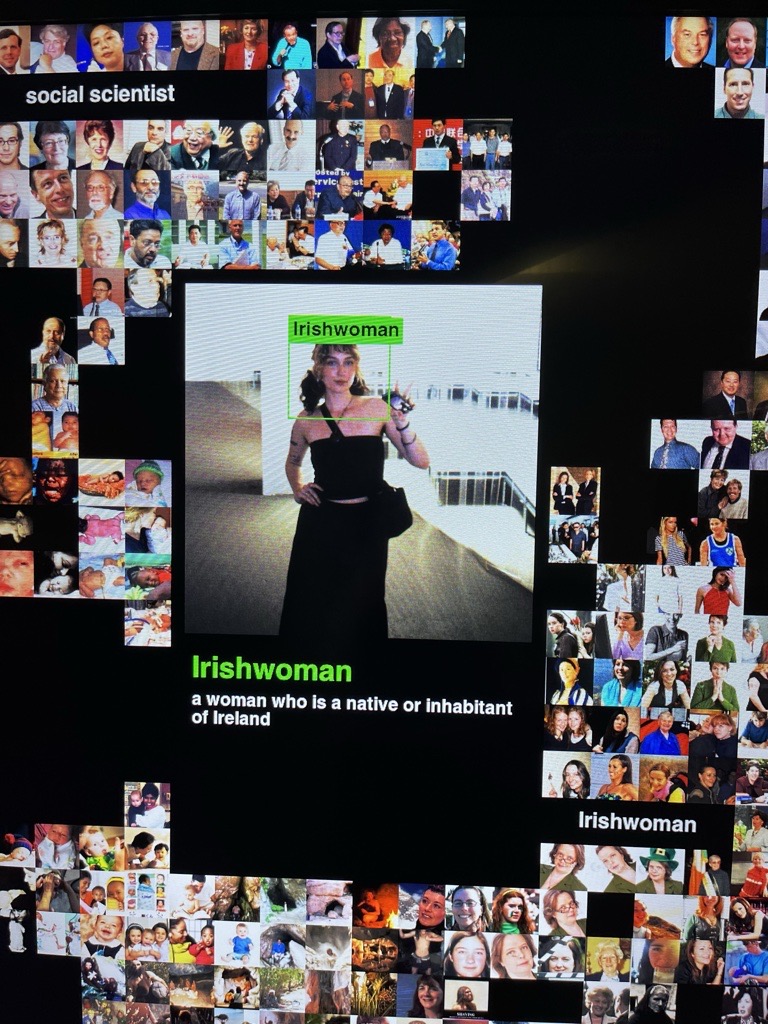

ImageNet Roulette

‘ImageNet Roulette is trained on the “person” categories from a dataset called ImageNet (developed at Princeton and Stanford Universities in 2009), one of the most widely used training sets in machine learning research and development.

The project was a provocation, acting as a window into some of the racist, misogynistic, cruel, and simply absurd categorizations embedded within ImageNet and other training sets that AI models are built upon.’

My project lets the training set “speak for itself,” and in doing so, highlights why classifying people in this way is unscientific at best, and deeply harmful at worst.

Trevor Paglen, Artist

References

Ulnicane, I. (2024). Intersectionality in Artificial Intelligence: Framing Concerns and Recommendations for Action. Social Inclusion, 12(0). doi:https://doi.org/10.17645/si.7543

Young, E.S., Wajcman, J. and Sprejer, L. (2023). Mind the gender gap: Inequalities in the emergent professions of artificial intelligence (AI) and data science. New Technology, Work and Employment, 38(3). Available at: https://onlinelibrary.wiley.com/doi/10.1111/ntwe.12278

Prates, M.O.R., Avelar, P.H. and Lamb, L.C. (2019). Assessing Gender Bias in Machine Translation: A Case Study with Google Translate. Neural Computing and Applications, 32(10). doi:https://doi.org/10.1007/s00521-019-04144-6

Angwin, J., Larson, J., Mattu, S. and Kirchner, L. (2016). Machine Bias. ProPublica. Available at: https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing

Hulick, K. (2024). AI image generators tend to exaggerate stereotypes. ScienceNewsExplores. Available at: https://www.snexplores.org/article/ai-image-generators-bias

The Alan Turing Institute. (2023). Women in Data Science and AI. Available at: https://www.turing.ac.uk/research/research-programmes/public-policy/public-policy-themes/women-data-science-and-ai

UNESCO. (2020). Artificial Intelligence and gender equality: key findings of UNESCO’s Global Dialogue. Paris: UNESCO. Available at: https://unesdoc.unesco.org/ark:/48223/pf0000374174/

Dillon, S. and Collett, C. (2019). AI and Gender: Four Proposals for Future Research. Apollo – University of Cambridge Repository. doi:https://doi.org/10.17863/cam.41459